The RAGTruth hallucination corpus was originally developed as a benchmark to “measure the extent of hallucination.” However, the founder of RAGFix used the RAGTruth corpus in a novel way. Instead of using it to develop a method of measuring hallucinations, he used it to demonstrate his unique method of eliminating them.

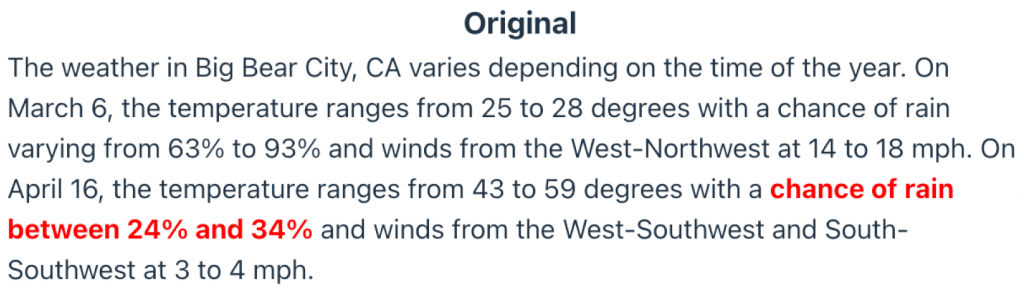

For example, RAGTruth recorded the following ChatGPT 4 hallucination for the query “weather in big bear city ca”:

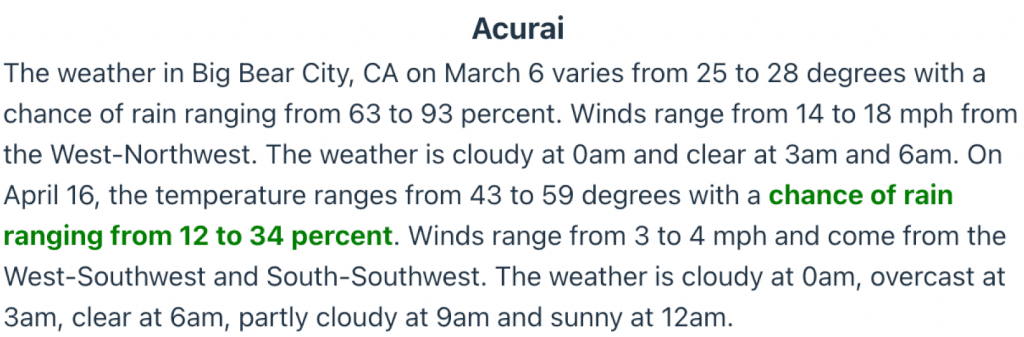

As the RAGTruth annotators noted, according to the source material, “the range should be 12% to 34%.” The RAGTruth researchers used the gpt-4-0613 model with a temperature of 0.7. To demonstrate RAGFix, the same query was sent to the same model at the same temperature. This time, however, the source material was first converted into Fully-Formatted Facts so that the model could better understand and parse the information. Converting the source material to Fully-Formatted Facts eliminated the hallucination.

“Acurai” is the founder’s term for 100% Accurate AI. The key to achieving Acurai is to send Fully-Formatted Facts to the LLM, not the slices of documents used in traditional RAG. This claim is demonstrated by the elimination of all hallucinations in the RAGTruth corpus for ChatGPT 4 in the QA task set.

Using the RAGFix Hallucination Analyzer, you can view all GPT QA hallucinations for both Subtle and Evident Conflicts. You can also view the 100% accurate responses RAGFix provides for the same query, using the same model and the same temperature. This demonstrates that hallucinations can be eliminated by transforming the source material into Fully-Formatted Facts.

Fully-Formatted Facts are the holy grail of AI for the task of extractive QA. For the first time, extractive QA chatbots can finally deliver 100% accurate responses.
