The founder of RAGFix discovered the actual cause of hallucinations, and it’s very different from what the industry has believed. Fortunately, anyone can verify the underlying cause of hallucinations once they know where to look.

Anyone can confirm the cause of hallucinations by performing the following three steps on any erroneous RAG-based response:

- Identify the first wrong statement in the output.

- Find the corresponding noun phrase in the output window that preceded the hallucination.

- Go to the source material and see that the noun phrase produced the errant “route” (the words that formed the basis of the hallucination).
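The three steps above can be partly mechanized. The following is a minimal sketch, not RAGFix code: the helper `find_routes` is a hypothetical name, the noun phrase is still identified by hand, and the source passage is a toy stand-in rather than the actual RAGTruth text. It lists the words that follow each occurrence of the noun phrase in the source so the candidate “routes” can be inspected.

```python
import re


def find_routes(source: str, noun_phrase: str, window: int = 8) -> list[str]:
    """Return the words that follow each occurrence of noun_phrase in source."""
    routes = []
    pattern = re.compile(re.escape(noun_phrase), re.IGNORECASE)
    for match in pattern.finditer(source):
        # Keep only the next `window` words after the match as the candidate route.
        following = source[match.end():].split()[:window]
        routes.append(" ".join(following))
    return routes


# Toy stand-in for a source passage (NOT the actual RAGTruth text).
source = (
    "Physical properties of calcium: silver-grey metal, melts at 840°C, "
    "boils at 1484°C. Magnesium is a lightweight metal used in alloys."
)

# Step 1: the output text that preceded the first wrong statement.
output_before_error = (
    "Based on the given passages, some physical properties of magnesium include"
)

# Step 2: the noun phrase, identified by hand in this sketch.
noun_phrase = "physical properties"
assert noun_phrase in output_before_error.lower()

# Step 3: look up where that noun phrase leads in the source.
routes = find_routes(source, noun_phrase)
for route in routes:
    print(route)
```

In this toy passage the only route following “physical properties” belongs to calcium, which is the kind of mismatch the manual analysis is meant to surface.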

The founder of RAGFix performed such an analysis on hundreds of hallucinations. Every single hallucination traced back to this one cause.

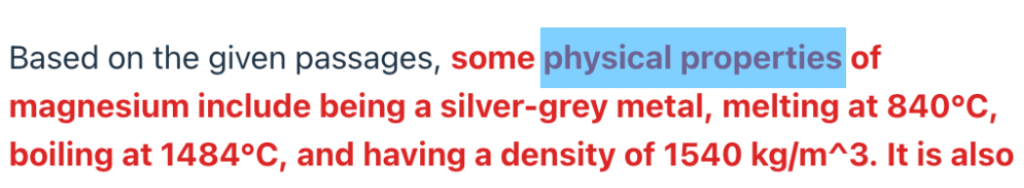

Let’s perform the simple analysis on the following hallucination contained in the RAGTruth hallucination corpus: “Based on the given passages, some physical properties of magnesium include being a silver-grey metal, melting at 840°C, boiling at 1484°C…” The text in red is a hallucination. So let’s apply the three steps above to identify the actual cause of the error.

Prior to the hallucination, the only text in the output window was: “Based on the given passages, some physical properties of magnesium include.” Thus, the corresponding noun phrase prior to the error is “physical properties”:

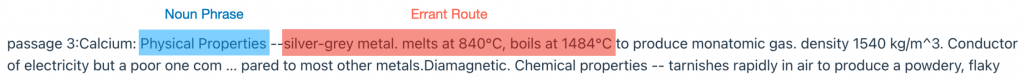

So now let’s look at the source material to see whether the noun phrase is the cause of an erroneous route, and then label the noun phrase and the erroneous route:

Notice that the noun phrase “Physical Properties” caused the LLM to go down an errant route. Importantly, notice that the source material specifically labels the information as being the physical properties of calcium. GPT-3.5 Turbo ignored the context. The noun-phrase match caused it to go down the wrong route. Noun-phrase matching overrides context. And so it is every single time.

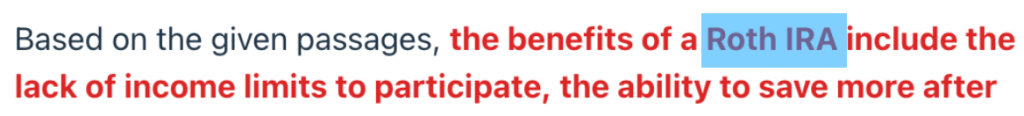

As another case in point, consider another example from the RAGTruth hallucination corpus: “Based on the given passages, the benefits of a Roth IRA include the lack of income limits to participate…” The text in red is a hallucination. According to the source materials, it’s a Roth 401(k) that includes the lack of income limits to participate.

So what caused the hallucination, even though the LLM was given the correct information? Prior to the hallucination, the only text in the output window was: “Based on the given passages, the benefits of a Roth IRA.” Hence, “Roth IRA” is the corresponding noun phrase:

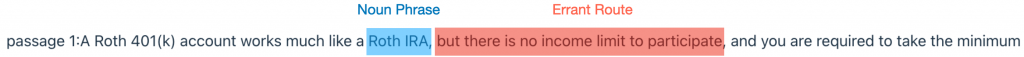

Once again, by looking at the source material we can see that ChatGPT matched on the noun phrase and then traversed an errant route:

Notice that ChatGPT did not parse the sentence correctly. It simply matched the noun phrase and then went down the errant route.

Contrary to Popular Belief, LLMs Do Not “Understand” Context

LLMs are machine learning models. All machine learning models are sophisticated pattern matchers. The pattern matching creates the illusion of understanding. This can be fully confirmed by repeatedly performing the same simple three steps on dozens of hallucinations. The result will always be the same provided you are using an LLM that does not inject parametric knowledge.

Parametric knowledge refers to knowledge learned during training. If you are using a sophisticated LLM that can constrain itself to the provided source material then you will find that every hallucination comes from a single cause: Noun-Phrase Collisions.

For the first time in history, there are finally LLMs that are capable of confining their responses to the provided text. The final key to 100% accuracy is to transform the prompts and source material into Fully-Formatted Facts to eliminate the root cause of hallucinations.

Fully-Formatted Facts

Fully-Formatted Facts contain three properties.

The first property is that all statements contain simple routes. Notice how both hallucinations involved source material with relatively complex routes. Thus, the information needs to be rewritten so that all noun phrases correspond to easily identifiable routes. Combined with the requirement that each statement be true in itself, this gives noun phrases that all correspond to easily identifiable routes that are inherently true within themselves.

The second property is that each individual statement is true in itself. In other words, no context is needed to “understand” the truth. For example, consider a news article published many years ago. This article states: “George Bush is the president of the United States.” That statement is not independently true on its own. Therefore, to fulfill this property of a Fully-Formatted Fact, an absolute date would need to be added to the statement (e.g. the date of the article would be added to every statement that would change over time).
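As a rough illustration of making a statement true in itself, the sketch below stamps a statement with its publication date and resolves a simple “N days ago” reference to an absolute date. The function names, the publication date, and the “merger” sentence are all hypothetical and used only for illustration. Deciding which statements are temporary is the hard part and is not attempted here.

```python
import re
from datetime import date, timedelta

# Small lookup for spelled-out numbers; a real system would need far more coverage.
NUMBER_WORDS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5}


def resolve_relative_days(statement: str, published: date) -> str:
    """Replace 'N days ago' with the absolute date implied by the publication date."""

    def to_absolute(match: re.Match) -> str:
        raw = match.group(1).lower()
        days = int(raw) if raw.isdigit() else NUMBER_WORDS[raw]
        return "on " + (published - timedelta(days=days)).isoformat()

    pattern = r"\b(\d+|" + "|".join(NUMBER_WORDS) + r")\s+days?\s+ago\b"
    return re.sub(pattern, to_absolute, statement, flags=re.IGNORECASE)


def stamp(statement: str, published: date) -> str:
    """Prefix the publication date so the statement no longer depends on context."""
    return f"As of {published.isoformat()}, {statement}"


# Hypothetical publication date, chosen only for illustration.
published = date(2005, 6, 1)

stamped = stamp("George Bush is the president of the United States.", published)
resolved = resolve_relative_days("The merger closed two days ago.", published)
print(stamped)
print(resolved)
```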

The third property is that noun-phrase collisions must be removed before querying the LLM and then added back in during post-processing. Consider the hallucination regarding the physical properties of magnesium. If the source texts contain the “physical properties” of both magnesium and calcium, then the LLM can confuse the physical properties of one metal with those of the other. In fact, that’s exactly what happened. Therefore, all potentially conflicting noun phrases need to be stripped prior to the LLM query. The noun phrases can then be reconstituted during post-processing.
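One possible reading of “strip, then reconstitute” is to swap each colliding noun phrase for a unique placeholder before the query and to swap the placeholders back afterwards. The sketch below illustrates that idea only; it is an assumption, not the method RAGFix actually uses, and the function names, placeholder format, and sample sentences are hypothetical.

```python
def mask_collisions(text: str, phrases: list[str]) -> tuple[str, dict[str, str]]:
    """Replace each colliding noun phrase with a unique placeholder."""
    mapping: dict[str, str] = {}
    # Longest phrases first, so a shorter phrase never clobbers part of a longer one.
    for i, phrase in enumerate(sorted(phrases, key=len, reverse=True), start=1):
        placeholder = f"[[NP{i}]]"
        if phrase in text:
            text = text.replace(phrase, placeholder)
            mapping[placeholder] = phrase
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Post-processing step: put the original noun phrases back."""
    for placeholder, phrase in mapping.items():
        text = text.replace(placeholder, phrase)
    return text


# Toy source with two colliding "physical properties" phrases (illustrative only).
source = (
    "The physical properties of calcium include a melting point of 840°C. "
    "The physical properties of magnesium include a silvery-white appearance."
)
phrases = ["physical properties of calcium", "physical properties of magnesium"]

masked, mapping = mask_collisions(source, phrases)
print(masked)

# Stand-in for an LLM answer written in terms of the placeholders.
llm_answer = "The [[NP1]] include a silvery-white appearance."
final_answer = restore(llm_answer, mapping)
print(final_answer)
```

After masking, the two passages no longer share the phrase “physical properties”, so there is nothing left for a noun-phrase match to collide on.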

For a more detailed explanation of how Fully-Formatted Facts produce 100% accurate responses, see “SYSTEM AND METHOD FOR ACCURATE AI CONTENT GENERATION.”

RAGFix Endpoints

RAGFix makes it easy to implement the three properties of Fully-Formatted Facts. In fact, RAGFix contains one REST POST endpoint for each of the three properties:

- /simplify-routes: This endpoint is essential for all source material. This endpoint uses multiple fine-tuned AI models plus Natural Language Processing models to rewrite the source material in a manner in which all noun phrases are accompanied by easily identifiable routes.

- /time-adjustment: Use this endpoint for any source material involving current events (such as press releases, news articles, social media posts, etc.). This endpoint timestamps any statement that: 1) is in the present tense and is temporary; 2) is in the future tense; or 3) contains a relative date reference (e.g. “two days ago”). This endpoint allows press releases, news articles, social media posts, and more to fulfill the property that each statement is true in itself.

- /generate-response: This endpoint receives the user’s query and the simplified routes. It uses multiple AI, ML, and NLP models to rewrite the combined source materials in a manner that is devoid of noun-phrase collisions; sends the prompt and rewritten sources to the LLM; and then reconstitutes the proper wording during post-processing.
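To show how the three endpoints might be chained in a RAG workflow, here is a minimal sketch using only the Python standard library. Only the endpoint paths and the POST method come from the description above. The base URL, the JSON field names (`text`, `query`, `sources`), the response shape, and the order of the first two calls are assumptions made for illustration. The example runs offline against a stub sender instead of a real HTTP client.

```python
import json
import urllib.request
from typing import Callable

# Hypothetical placeholder; the real base URL is not given in this article.
BASE_URL = "https://ragfix.example/api"


def build_post(endpoint: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for one endpoint."""
    return urllib.request.Request(
        url=BASE_URL + endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_pipeline(
    source: str,
    query: str,
    time_sensitive: bool,
    send: Callable[[urllib.request.Request], dict],
) -> dict:
    """Chain the endpoints; payload and response field names are assumed."""
    text = source
    if time_sensitive:
        # Only needed for current-events material such as news or press releases.
        text = send(build_post("/time-adjustment", {"text": text}))["text"]
    text = send(build_post("/simplify-routes", {"text": text}))["text"]
    return send(build_post("/generate-response", {"query": query, "sources": [text]}))


def stub_send(request: urllib.request.Request) -> dict:
    """Offline stand-in for an HTTP client: echoes the text back unchanged."""
    body = json.loads(request.data)
    print(request.get_method(), request.full_url)
    return {"text": body.get("text", ""), "answer": "(stub)"}


result = run_pipeline("Source text.", "What does the source say?", True, stub_send)
```

Passing the sender in as a parameter keeps the sketch testable without network access; in a real integration it would be replaced by whatever HTTP client and authentication the service requires.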

The RAGFix endpoints are easily added to any RAG workflow, finally making 100% accurate AI available to all.
